Using Kaggle datasets in a Google Colaboratory notebook

Almost every aspiring data scientist uses Kaggle. It hosts datasets for nearly every domain, so you can find one for almost any use case, from entertainment, medicine, and e-commerce to astronomy. Its users practice on these datasets to test their skills in data science and machine learning.

Kaggle datasets vary widely in size: some are under 1 MB, while others reach 100 GB. In addition, many deep learning workloads need a GPU to keep training times short. Google Colab is a promising platform that lets beginners run and test their code in a cloud environment that offers GPU runtimes.

Step 1: Get an API token from your Kaggle profile

In your Kaggle account settings, create a new API token.

Step 2: Open Google Colab and create a new notebook.

Step 3: Download the kaggle.json file.

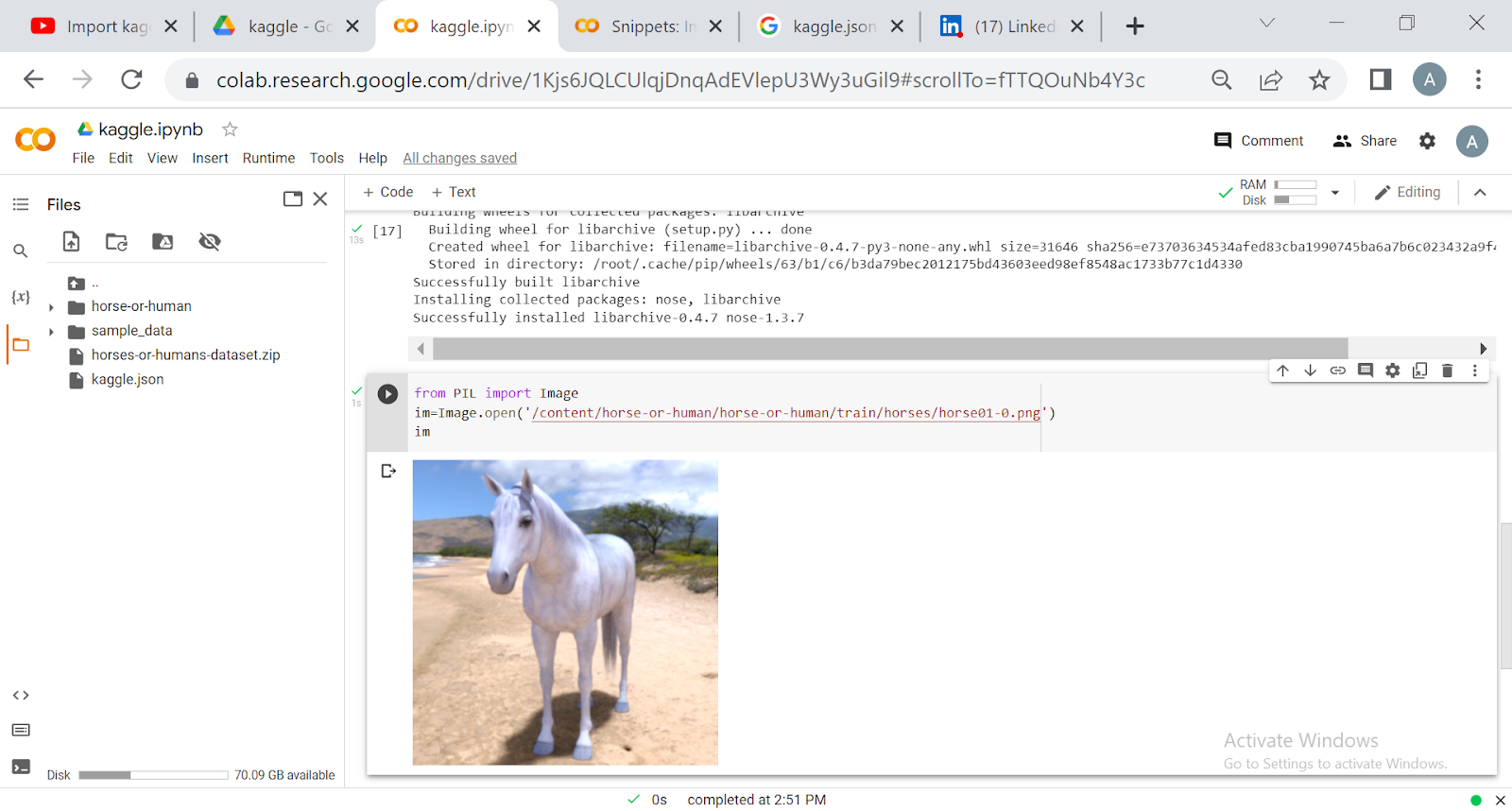
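The kaggle.json token is a small JSON file with two fields, your Kaggle username and your API key. As a hedged sketch, a quick check like the one below can confirm the file is intact before you upload it to Colab; `load_kaggle_credentials` is a hypothetical helper written for this example, not part of the Kaggle library.

```python
import json
from pathlib import Path


def load_kaggle_credentials(path):
    """Hypothetical helper: read a kaggle.json token and return (username, key).

    Assumes the file is a JSON object with "username" and "key" fields,
    which is the layout of the token Kaggle generates.
    """
    data = json.loads(Path(path).read_text())
    # Collect any required field that is absent or empty.
    missing = [field for field in ("username", "key") if not data.get(field)]
    if missing:
        raise ValueError(f"kaggle.json is missing: {', '.join(missing)}")
    return data["username"], data["key"]
```

For example, `load_kaggle_credentials("kaggle.json")` returns a `(username, key)` tuple and raises `ValueError` if either field is empty.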

Step 4: Execute the following commands

1. !pip install kaggle

2. from google.colab import files

files.upload()  # shows an upload widget; select the kaggle.json file from Step 3

3. # Create a new folder
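Creating the folder is usually followed by copying kaggle.json into it and restricting its permissions, because the Kaggle client looks for the token at `~/.kaggle/kaggle.json` by default and warns when the file is readable by other users. Below is a minimal plain-Python sketch of that step, assuming that default location; `install_kaggle_token` and its `config_dir` parameter are hypothetical names introduced only for this illustration.

```python
import shutil
import stat
from pathlib import Path


def install_kaggle_token(uploaded_path, config_dir=None):
    """Hypothetical helper: copy kaggle.json to where the Kaggle client reads it.

    Assumes the default location ~/.kaggle/kaggle.json; config_dir lets
    you point elsewhere (for example, a temporary folder when testing).
    """
    target_dir = Path(config_dir) if config_dir else Path.home() / ".kaggle"
    # Create the folder (and any missing parents) if it does not exist yet.
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "kaggle.json"
    shutil.copyfile(uploaded_path, target)
    # Owner read/write only (mode 600), matching the usual `chmod 600` advice.
    target.chmod(stat.S_IRUSR | stat.S_IWUSR)
    return target
```

In Colab, `files.upload()` saves the uploaded file to the working directory, so calling `install_kaggle_token("kaggle.json")` would place it in `~/.kaggle/`. A dataset can then be fetched with `!kaggle datasets download -d <owner>/<dataset-slug>`, where the placeholders stand for the identifier shown in the dataset's URL.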
